Five ways criminals are using AI

Artificial intelligence has brought a big boost in productivity—to the criminal underworld.

Generative AI provides a new, powerful tool kit that allows malicious actors to work far more efficiently and internationally than ever before, says Vincenzo Ciancaglini, a senior threat researcher at the security company Trend Micro.

Most criminals are “not living in some dark lair and plotting things,” says Ciancaglini. “Most of them are regular folks that carry on regular activities that require productivity as well.”

Last year saw the rise and fall of WormGPT, an AI language model built on top of an open-source model and trained on malware-related data, which was created to assist hackers and had no ethical rules or restrictions. But last summer, its creators announced they were shutting the model down after it started attracting media attention. Since then, cybercriminals have mostly stopped developing their own AI models. Instead, they are opting for tricks with existing tools that work reliably.

That’s because criminals want an easy life and quick gains, Ciancaglini explains. For any new technology to be worth the unknown risks associated with adopting it—for example, a higher risk of getting caught—it has to be better and bring higher rewards than what they’re currently using.

Here are five ways criminals are using AI now.

Phishing

The biggest use case for generative AI among criminals right now is phishing, which involves trying to trick people into revealing sensitive information that can be used for malicious purposes, says Mislav Balunović, an AI security researcher at ETH Zurich. Researchers have found that the rise of ChatGPT has been accompanied by a huge spike in the number of phishing emails.

Spam-generating services, such as GoMail Pro, have ChatGPT integrated into them, which allows criminal users to translate or improve the messages sent to victims, says Ciancaglini. OpenAI’s policies restrict people from using its products for illegal activities, but that is difficult to police in practice, because many innocent-sounding prompts could be used for malicious purposes too, he says.

OpenAI says it uses a mix of human reviewers and automated systems to identify and enforce against misuse of its models, and issues warnings, temporary suspensions and bans if users violate the company’s policies.

“We take the safety of our products seriously and are continually improving our safety measures based on how people use our products,” a spokesperson for OpenAI told us. “We are constantly working to make our models safer and more robust against abuse and jailbreaks, while also maintaining the models’ usefulness and task performance,” they added.

In a report from February, OpenAI said it had closed five accounts associated with state-affiliated malicious actors.

Before, so-called Nigerian prince scams, in which someone promises the victim a large sum of money in exchange for a small up-front payment, were relatively easy to spot because the English in the messages was clumsy and riddled with grammatical errors, Ciancaglini says. Language models allow scammers to generate messages that sound like something a native speaker would have written.

“English speakers used to be relatively safe from non-English-speaking [criminals] because you could spot their messages,” Ciancaglini says. That’s not the case anymore.

Thanks to better AI translation, different criminal groups around the world can also communicate better with each other. The risk is that they could coordinate large-scale operations that span beyond their nations and target victims in other countries, says Ciancaglini.

Deepfake audio scams

Generative AI has allowed deepfake development to take a big leap forward, with synthetic images, videos, and audio looking and sounding more realistic than ever. This has not gone unnoticed by the criminal underworld.

Earlier this year, an employee in Hong Kong was reportedly scammed out of $25 million after cybercriminals used a deepfake of the company’s chief financial officer to convince the employee to transfer the money to the scammers’ account. “We’ve seen deepfakes finally being marketed in the underground,” says Ciancaglini. His team found people on platforms such as Telegram showing off their “portfolio” of deepfakes and selling their services for as little as $10 per image or $500 per minute of video. One of the most popular people for criminals to deepfake is Elon Musk, says Ciancaglini.

And while deepfake videos remain complicated to make and easier for humans to spot, that is not the case for audio deepfakes. They are cheap to make and require only a couple of seconds of someone’s voice—taken, for example, from social media—to generate something scarily convincing.

In the US, there have been high-profile cases where people have received distressing calls from loved ones saying they’ve been kidnapped and asking for money to be freed, only for the caller to turn out to be a scammer using a deepfake voice recording.

“People need to be aware that now these things are possible, and people need to be aware that now the Nigerian king doesn’t speak in broken English anymore,” says Ciancaglini. “People can call you with another voice, and they can put you in a very stressful situation,” he adds.

There are some ways for people to protect themselves, he says. Ciancaglini recommends agreeing on a regularly changing secret safe word between loved ones that could help confirm the identity of the person on the other end of the line.

“I password-protected my grandma,” he says.

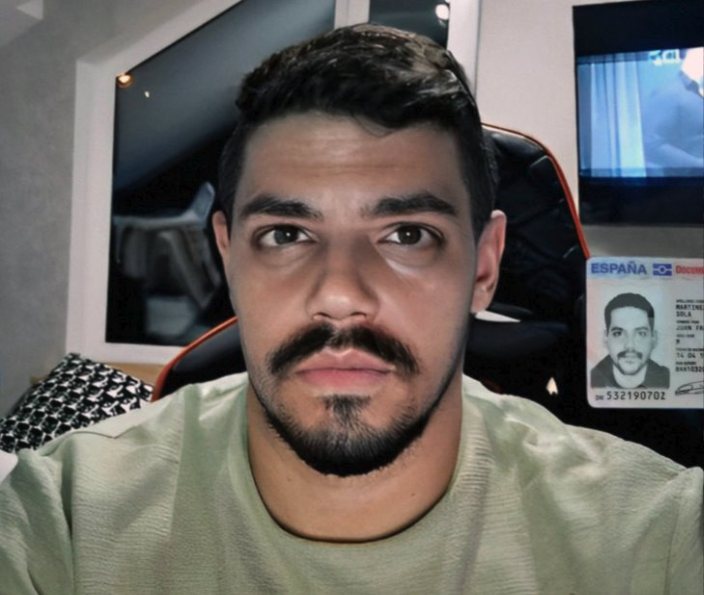

Bypassing identity checks

Another way criminals are using deepfakes is to bypass “know your customer” verification systems. Banks and cryptocurrency exchanges use these systems to verify that their customers are real people. They require new users to take a photo of themselves holding a physical identification document in front of a camera. But criminals have started selling apps on platforms such as Telegram that allow people to get around the requirement.

They work by offering a fake or stolen ID and imposing a deepfake image on top of a real person’s face to trick the verification system on an Android phone’s camera. Ciancaglini has found examples where people are offering these services for cryptocurrency website Binance for as little as $70.

“They are still fairly basic,” Ciancaglini says. The techniques they use are similar to Instagram filters, where someone else’s face is swapped for your own.

“What we can expect in the future is that [criminals] will use actual deepfakes … so that you can do more complex authentication,” he says.

Jailbreak-as-a-service

If you ask most AI systems how to make a bomb, you won’t get a useful response.

That’s because AI companies have put in place various safeguards to prevent their models from spewing harmful or dangerous information. Instead of building their own AI models without these safeguards, which is expensive, time-consuming, and difficult, cybercriminals have begun to embrace a new trend: jailbreak-as-a-service.

Most models come with rules around how they can be used. Jailbreaking allows users to manipulate the AI system to generate outputs that violate those policies—for example, to write code for ransomware or generate text that could be used in scam emails.

Services such as EscapeGPT and BlackhatGPT offer anonymized access to language-model APIs and jailbreaking prompts that update frequently. To fight back against this growing cottage industry, AI companies such as OpenAI and Google frequently have to plug security holes that could allow their models to be abused.

Jailbreaking services use different tricks to break through safety mechanisms, such as posing hypothetical questions or asking questions in foreign languages. There is a constant cat-and-mouse game between AI companies trying to prevent their models from misbehaving and malicious actors coming up with ever more creative jailbreaking prompts.

These services are hitting the sweet spot for criminals, says Ciancaglini.

“Keeping up with jailbreaks is a tedious activity. You come up with a new one, then you need to test it, then it’s going to work for a couple of weeks, and then OpenAI updates their model,” he adds. “Jailbreaking is a super-interesting service for criminals.”

Doxxing and surveillance

AI language models are a perfect tool not only for phishing but also for doxxing (revealing private, identifying information about someone online), says Balunović. This is because AI language models are trained on vast amounts of internet data, including personal data, and can deduce where, for example, someone might be located.

As an example of how this works, you could ask a chatbot to pretend to be a private investigator with experience in profiling. Then you could ask it to analyze text the victim has written, and infer personal information from small clues in that text—for example, their age based on when they went to high school, or where they live based on landmarks they mention on their commute. The more information there is about them on the internet, the more vulnerable they are to being identified.

Balunović was part of a team of researchers that found late last year that large language models, such as GPT-4, Llama 2, and Claude, are able to infer sensitive information such as people’s ethnicity, location, and occupation purely from mundane conversations with a chatbot. In theory, anyone with access to these models could use them this way.

Since their paper came out, new services that exploit this feature of language models have emerged.

While the existence of these services doesn’t indicate criminal activity, it points to the new capabilities malicious actors could get their hands on. And if regular people can build surveillance tools like this, state actors probably have far better systems, Balunović says.

“The only way for us to prevent these things is to work on defenses,” he says.

Companies should invest in data protection and security, he adds.

For individuals, increased awareness is key. People should think twice about what they share online and decide whether they are comfortable with having their personal details used in language models, Balunović says.